Samsung's KV-SSD: An NVMe SSD with In-storage Key-value Store

Conventional Key-value Stores

Let's first see what the overall system looks like when we run a key-value
store like RocksDB on top of an SSD. Figure 1 shows the main components. At
the very top, we have the key-value application that uses the key-value API.
Next, we have a key-value store such as RocksDB. The key-value store uses
the file system managed by the OS to read and write its data. The OS uses
the SSD driver to communicate with the SSD. In a conventional SSD, the unit
of communication between the OS and the SSD is the block, i.e., the OS reads
and writes blocks, each of which may include multiple key-value pairs.

Figure 1. The software stack on top of SSDs in conventional key-value stores

Let's consider an LSM key-value store such as RocksDB. The two main data
structures are the memtable and SSTables. The memtable is in memory and
SSTables are in persistent storage. When we put keys into the key-value
store, they are added to the memtable. Once the memtable reaches a certain
size, it is written to storage as an SSTable. As usual, we also need a
Write-Ahead Log (WAL) that contains the updates we put into the memtable.
The WAL is on persistent storage, so in case of a crash, we can restore the
memtable from the WAL. SSTables are immutable: whenever the memtable reaches
a certain size, we create a new SSTable, store it, and never change it.

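Since SSTables are never changed, they keep accumulating, and older ones may
hold stale versions of keys. That's why we need a background process that
merges these SSTables. This is called compaction.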

To read a key, we first check the memtable. If the key is not there, we
check the most recent SSTable. If it is not there either, we check the
second most recent SSTable, and we continue this until we find the key.
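The write and read paths described above can be sketched in a few lines of Python. This is only a toy model for illustration, not how RocksDB is implemented: the class name `ToyLSM`, the `memtable_limit` parameter, and the use of in-memory lists and dicts in place of on-disk files are all assumptions made for the example.

```python
class ToyLSM:
    """Toy LSM store: one memtable, a WAL, and immutable SSTables."""

    def __init__(self, memtable_limit=2):
        self.memtable_limit = memtable_limit  # flush threshold, in keys
        self.memtable = {}
        self.wal = []        # stands in for the on-disk write-ahead log
        self.sstables = []   # oldest first; never modified after creation

    def put(self, key, value):
        self.wal.append((key, value))  # log first, so a crash can be replayed
        self.memtable[key] = value
        if len(self.memtable) >= self.memtable_limit:
            self._flush()

    def _flush(self):
        # Write the memtable out as a new sorted, immutable SSTable.
        self.sstables.append(dict(sorted(self.memtable.items())))
        self.memtable = {}
        self.wal = []  # the flushed entries no longer need the log

    def get(self, key):
        if key in self.memtable:
            return self.memtable[key]
        for table in reversed(self.sstables):  # newest to oldest
            if key in table:
                return table[key]
        return None

    def compact(self):
        # Merge all SSTables into one; newer values overwrite older ones.
        merged = {}
        for table in self.sstables:
            merged.update(table)
        self.sstables = [dict(sorted(merged.items()))]


db = ToyLSM(memtable_limit=2)
for key, value in [("a", 1), ("b", 2), ("a", 3), ("c", 4), ("d", 5)]:
    db.put(key, value)
print(db.get("a"), len(db.sstables))  # newest value of "a", two SSTables
```

Note how a read may have to look through several SSTables before it finds the key, and how `compact` rewrites data that was already written once. Both cost host CPU and I/O.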

Can conventional key-value stores fully utilize NVMe SSDs?

So, as you can imagine, there is a non-negligible amount of computation
going on in the background for a key-value store like RocksDB, which
requires CPU and memory resources. Other factors in conventional key-value
stores, such as having multiple layers, resource contention, maintaining the
WAL, and read/write amplification, also reduce the ability of the CPU to
issue more I/O requests to the device. Usually, with slow storage devices
like disks, the CPU doesn't become the bottleneck.

But the question now is: with much faster storage such as NVMe SSDs, is
that still true?

The researchers at Samsung have presented some calculations and experiments
to answer this question, which we review here. You can refer to the full
paper to read more [1].

Consider a modern NVMe SSD that can process 4 KB requests at a rate of 600
MB/s. To saturate such an SSD, the system needs to generate 150,000 requests
of size 4 KB per second (600 MB/s / 4 KB). That means the CPU must process
each request in less than 7 μs. If our CPU cannot do that and instead takes
30 μs per request, we would need at least 4 such CPUs (30 μs / 6.7 μs ≈ 4.5,
so 5 if we round up) to saturate our NVMe SSD.
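To make the arithmetic explicit, here is a small sketch of the same estimate. The function name `cpus_to_saturate` is ours, and decimal units (1 MB = 10^6 bytes, 1 KB = 10^3 bytes) are assumed so that the request rate comes out at the 150,000 quoted above. With these units the ratio is about 4.5, so the sketch rounds up to 5 whole CPUs.

```python
import math


def cpus_to_saturate(bandwidth_bytes_per_s, request_bytes, cpu_time_per_request_s):
    """Return (requests per second, time budget per request, CPUs needed)."""
    requests_per_s = bandwidth_bytes_per_s / request_bytes
    budget_s = 1.0 / requests_per_s  # time one CPU may spend on each request
    cpus = math.ceil(cpu_time_per_request_s * requests_per_s)
    return requests_per_s, budget_s, cpus


rate, budget, cpus = cpus_to_saturate(600e6, 4e3, 30e-6)
print(f"{rate:,.0f} requests/s, {budget * 1e6:.2f} us per request, {cpus} CPUs")
```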

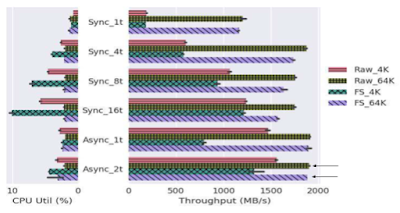

Now, let's look at some experiments. They tried to saturate an NVMe SSD
using a system with a 2.10 GHz Xeon E5 and 48 cores. Figure 2 shows the
results for various block sizes, numbers of threads, and communication modes
(sync/async). They also compare the results with and without a file system.
The left diagram shows the CPU utilization. Note that this is the total
utilization of all 48 cores. Thus, each 2.08% corresponds to 1 full CPU
core. Generally, with asynchronous I/O and larger blocks, we can saturate
the device more easily. Thus, pay attention to the points marked by arrows.
These two points show the least amount of CPU that we need to saturate the
SSD with and without a file system. By checking these two points, we can see
that in this experiment:

- At least 1 CPU core must be dedicated to saturating the SSD.
- We need more CPU resources to saturate the SSD when we have a file system (i.e. more layers).
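When reading the CPU utilization plot, it helps to convert the whole-machine percentage into an equivalent number of fully busy cores. A minimal helper, assuming the 48-core machine from the experiment (the name `busy_cores` is ours):

```python
def busy_cores(total_utilization_pct, num_cores=48):
    """Convert whole-machine CPU utilization (%) into fully busy cores."""
    return total_utilization_pct / 100.0 * num_cores


print(round(busy_cores(2.08), 2))  # roughly one full core
```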

Figure 2. Sequential I/O benchmark with and without file system [1]

Simplifying the Storage Stack with KV-SSD

KV-SSD lets us get rid of the software components needed in conventional
key-value stores by directly providing a variable-size key-value API to user
applications. KV-SSD takes care of all processing required to store and
retrieve key-value pairs, leaving the CPU and memory of the host machine
free. In a conventional key-value store, the storage stack must translate
key-value pairs to storage blocks before talking to the storage device. Now,
with KV-SSD, we can talk to the device directly in key-value pairs.
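The difference between the two interfaces can be illustrated with a toy model. Everything below is hypothetical: the class and method names (`ToyBlockSSD`, `HostKVOnBlocks`, `ToyKVSSD`, `store`, `retrieve`) are made up for this sketch and are not Samsung's actual API, the 4096-byte block size is an assumption, and values are assumed to fit in one block.

```python
BLOCK_SIZE = 4096  # bytes per block (assumed for this sketch)


class ToyBlockSSD:
    """Conventional device: only understands fixed-size, numbered blocks."""

    def __init__(self):
        self.blocks = {}

    def write_block(self, lba, data):
        assert len(data) == BLOCK_SIZE
        self.blocks[lba] = data

    def read_block(self, lba):
        return self.blocks[lba]


class HostKVOnBlocks:
    """Host-side store: packs pairs into blocks and keeps its own index."""

    def __init__(self, ssd):
        self.ssd = ssd
        self.index = {}            # key -> (block number, offset, length)
        self.buffer = bytearray()  # block currently being filled
        self.next_lba = 0

    def put(self, key, value):
        if len(self.buffer) + len(value) > BLOCK_SIZE:
            self.flush()
        self.index[key] = (self.next_lba, len(self.buffer), len(value))
        self.buffer += value

    def flush(self):
        padded = bytes(self.buffer).ljust(BLOCK_SIZE, b"\0")
        self.ssd.write_block(self.next_lba, padded)
        self.buffer = bytearray()
        self.next_lba += 1

    def get(self, key):
        lba, offset, length = self.index[key]
        if lba == self.next_lba:  # still in the unflushed buffer
            return bytes(self.buffer[offset:offset + length])
        return self.ssd.read_block(lba)[offset:offset + length]


class ToyKVSSD:
    """KV device: the host hands over whole pairs; the device does the rest."""

    def __init__(self):
        self._pairs = {}  # stands in for the index kept inside the device

    def store(self, key, value):
        self._pairs[key] = value

    def retrieve(self, key):
        return self._pairs[key]
```

In the block-based version, the index and the packing logic live in host memory and run on the host CPU. In the key-value version, the host code shrinks to two calls.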

Figure 3. The software stack on top of KV-SSD

This results in a significantly smaller memory footprint. Regardless of the
size of our keyspace, with KV-SSD the memory requirement of the host machine
is O(1). We can estimate the memory requirement by multiplying the size of
the I/O queue by the size of a key-value pair. Another important benefit is
that when we remove all software components between the application and the
SSD, we remove possible sources of synchronization. Suppose we have a
key-value store that uses two SSDs in a node. In a conventional key-value
store, we have data structures on top of the SSDs that are shared between
these two SSDs. These shared data structures increase contention and are an
obstacle to the scalability that we wish to achieve by adding more SSDs. On
the other hand, KV-SSDs share nothing, so we can scale easily by adding more
KV-SSDs.
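As a rough illustration of the memory estimate, the sketch below multiplies the number of outstanding requests in the I/O queue by the size of one key-value pair. The concrete numbers (64 outstanding requests, 16-byte keys, 4 KiB values) are hypothetical and only serve as an example.

```python
def host_memory_bytes(queue_depth, key_bytes, value_bytes):
    """Rough host memory for in-flight requests: one pair per queue slot."""
    return queue_depth * (key_bytes + value_bytes)


# Hypothetical workload: 64 outstanding requests, 16 B keys, 4 KiB values.
print(host_memory_bytes(64, 16, 4096))  # 263168 bytes, i.e. 257 KiB
```

The result does not depend on how many keys are stored, which is what O(1) means here.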

What do we get with KV-SSD?

Various experimental results are provided in [1]. We don't want to cover all
of them in this post. Figure 4 is an interesting result that shows how we
can scale in our storage node by adding more NVMe devices. It shows the CPU
utilization and the throughput of a single node with various numbers of NVMe
devices for three conventional key-value stores, namely RocksDB,
RocksDB-SPDK, and Aerospike, along with KV-SSD. As the number of devices
increases, they run more key-value store instances to saturate the devices.
As expected, the host CPU usage is significantly lower when we use KV-SSDs
compared with conventional key-value stores, as KV-SSDs use their internal
resources and require less computation on the host. As the number of devices
increases, the CPU becomes saturated for conventional key-value stores,
which limits the increase in throughput. On the other hand, using KV-SSDs,
we can scale linearly by adding more devices.

Figure 4. KV-SSD allows us to scale linearly by adding more devices. [1]

Figure 5 shows the write amplification while running the benchmarks. Using
KV-SSD, the host-side write amplification is 1, i.e., when we want to write
a key-value pair, the host system issues only one write. This is expected,
as everything is managed inside the KV-SSD. On the other hand, in
conventional key-value stores, the write amplification is higher due to
compaction and writing to the WAL. Note that KV-SSD does not write to a WAL,
but thanks to its battery-backed DRAM, it achieves the same durability as
RocksDB.
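Host-side write amplification is simply the bytes the host sends to the device divided by the bytes the application asked to write. The sketch below uses made-up numbers purely to show the calculation; the factor of 3 for compaction is a hypothetical value, not a measurement from [1].

```python
def host_write_amplification(bytes_sent_to_device, bytes_written_by_app):
    """Ratio of device-bound bytes to application-written bytes."""
    return bytes_sent_to_device / bytes_written_by_app


app_bytes = 1_000_000

# Hypothetical LSM store: each byte goes to the WAL once, is flushed to an
# SSTable once, and is rewritten 3 more times by compaction.
lsm_bytes = app_bytes * (1 + 1 + 3)
print(host_write_amplification(lsm_bytes, app_bytes))  # 5.0

# KV-SSD: the host sends each pair to the device exactly once.
print(host_write_amplification(app_bytes, app_bytes))  # 1.0
```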

Figure 5. The host-side write amplification using KV-SSD is the optimal value of 1. [1]

In summary, the benefits of KV-SSD come from the following facts:

- Key-value management is done by the storage device itself and consumes resources (CPU and DRAM) of the storage device. Thus, we can scale in by adding more devices.
- Using KV-SSD, we have fewer layers, so we have less overhead.
- KV-SSDs in the node share nothing, so we don't have contention between them. Thus, we can scale easily.
- KV-SSD removes the need for a WAL while achieving the same durability guarantees as key-value stores with a WAL, thanks to its battery-backed DRAM.

We didn't cover any details about KV-SSD internals. I will try to cover them
in another post.

References

[1] Kang, Yangwook, Rekha Pitchumani, Pratik Mishra, Yang-suk Kee, Francisco Londono, Sangyoon Oh, Jongyeol Lee, and Daniel DG Lee. "Towards building a high-performance, scale-in key-value storage system." In Proceedings of the 12th ACM International Conference on Systems and Storage, pp. 144-154. 2019.
